Episodes

Monday Mar 27, 2023

145 - Guest: Elizabeth Croft, Professor of Robotics, part 2


This and all episodes at: https://aiandyou.net/ .


Robots - embodied AI - are coming into our lives more and more, from sidewalk delivery bots to dinosaur hotel receptionists. But how are we going to live with them when even basic interactions - like handing over an object - are more complex than we realized? Getting us those answers is Elizabeth Croft, Vice-President Academic and Provost of the University of Victoria in British Columbia, Canada, and an expert in the field of human-robot interaction. She has a PhD in robotics from the University of Toronto and was Dean of Engineering at Monash University in Melbourne, Australia. In the conclusion of our interview, we talk about robot body language, how to deal with a squishy world, and ethical foundations for robots. All this plus our usual look at today's AI headlines. Transcript and URLs referenced at HumanCusp Blog.


Monday Mar 20, 2023

144 - Guest: Elizabeth Croft, Professor of Robotics, part 1




Robots - embodied AI - are coming into our lives more and more, from sidewalk delivery bots to dinosaur hotel receptionists. But how are we going to live with them when even basic interactions - like handing over an object - are more complex than we realized? Getting us those answers is Elizabeth Croft, Vice-President Academic and Provost of the University of Victoria in British Columbia, Canada, and an expert in the field of human-robot interaction. She has a PhD in robotics from the University of Toronto and was Dean of Engineering at Monash University in Melbourne, Australia. In the first part of our interview, we talk about how she got into robotics, her research into what's really happening when you hand someone an object, and what engineers need to know about it before a robot barista can hand you a triple venti. All this plus our usual look at today's AI headlines. Transcript and URLs referenced at HumanCusp Blog.


Monday Mar 13, 2023

143 - Guest: Melanie Mitchell, AI Cognition Researcher, part 2



|

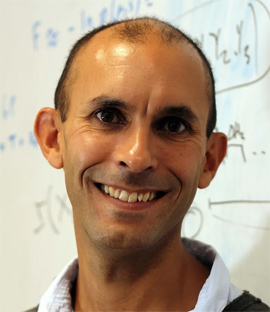

How intelligent - really - are the best AI programs like ChatGPT? How do they work? What can they actually do, and when do they fail? How humanlike do we expect them to become, and how soon do we need to worry about them surpassing us? Researching the answers to those questions is Melanie Mitchell, Professor at the Santa Fe Institute. Her current research focuses on conceptual abstraction, analogy-making, and visual recognition in artificial intelligence systems. She is the author or editor of six books and numerous scholarly papers in the fields of artificial intelligence, cognitive science, and complex systems. Her book Complexity: A Guided Tour (Oxford University Press) won the 2010 Phi Beta Kappa Science Book Award and was named by Amazon.com as one of the ten best science books of 2009. Her recent book, Artificial Intelligence: A Guide for Thinking Humans, is a thoughtful description of how to think about and understand AI, seen partly through the lens of her work with the polymath Douglas Hofstadter, author of the famous book Gödel, Escher, Bach, who made a number of connections between advances in AI and the human condition. In this conclusion of our interview, we talk about what ChatGPT isn't good at, how to find the edges of its intelligence, and the AI she built to make analogies like the ones you'd find on the SAT. All this plus our usual look at today's AI headlines. Transcript and URLs referenced at HumanCusp Blog.


Monday Mar 06, 2023

142 - Guest: Melanie Mitchell, AI Cognition Researcher, part 1




How intelligent - really - are the best AI programs like ChatGPT? How do they work? What can they actually do, and when do they fail? How humanlike do we expect them to become, and how soon do we need to worry about them surpassing us? Researching the answers to those questions is Melanie Mitchell, Professor at the Santa Fe Institute. Her current research focuses on conceptual abstraction, analogy-making, and visual recognition in artificial intelligence systems. She is the author or editor of six books and numerous scholarly papers in the fields of artificial intelligence, cognitive science, and complex systems. Her book Complexity: A Guided Tour (Oxford University Press) won the 2010 Phi Beta Kappa Science Book Award and was named by Amazon.com as one of the ten best science books of 2009. Her recent book, Artificial Intelligence: A Guide for Thinking Humans, is a thoughtful description of how to think about and understand AI, seen partly through the lens of her work with the polymath Douglas Hofstadter, author of the famous book Gödel, Escher, Bach, who made a number of connections between advances in AI and the human condition. In this first part, we’ll be talking a lot about ChatGPT and where it fits into her narrative about AI capabilities. All this plus our usual look at today's AI headlines. Transcript and URLs referenced at HumanCusp Blog.


Monday Feb 27, 2023

141 - Special Episode: Understanding ChatGPT




ChatGPT has taken the world by storm. In the unlikely event that you haven't heard of it, it's a large language model from OpenAI that answers general questions and requests so well, to the satisfaction and astonishment of people with no technical expertise, that it has captivated the public imagination and brought new meaning to the phrase "going viral." It acquired 1 million users within five days and 100 million within two months. But if you have heard of ChatGPT, you likely have many questions: What can it really do? How does it work? What is it not good at? What does this mean for jobs? And many more. We've been talking about those issues on this show since we started, and I've been anticipating an event like this since I predicted something very similar in my first book in 2017, so we are here to help. In this special episode, we'll look at all those questions and a lot more, plus discuss the new image generation programs. How can we tell an AI from a human now? What does this mean for the Turing Test, and what does it mean for tests of humans, otherwise known as term papers? Find out about all that and more. Transcript and URLs referenced at HumanCusp Blog.


Monday Feb 20, 2023

140 - Guest: Risto Uuk, EU AI Policy Researcher, part 2




I'm often asked what's going to happen with AI regulation, and my answer is that the place most advanced in that respect is the European Union, with its new AI Act. So here to tell us all about that is Risto Uuk. He is a policy researcher at the Future of Life Institute, focused primarily on researching AI policy-making to maximize the societal benefits of increasingly powerful AI systems. Previously, Risto worked for the World Economic Forum, did research for the European Commission, and provided research support at the Berkeley Existential Risk Initiative, all on AI. He has a master’s degree in Philosophy and Public Policy from the London School of Economics and Political Science. In part 2, we talk about the types of risk described in the act, the types of companies that could be affected and how, what it’s like to work in this field day to day, and how you can get involved. All this plus our usual look at today's AI headlines. Transcript and URLs referenced at HumanCusp Blog.


Monday Feb 13, 2023

139 - Guest: Risto Uuk, EU AI Policy Researcher, part 1




I'm often asked what's going to happen with AI regulation, and my answer is that the place most advanced in that respect is the European Union, with its new AI Act. So here to tell us all about that from Brussels is Risto Uuk. He is a policy researcher at the Future of Life Institute, focused primarily on researching AI policy-making to maximize the societal benefits of increasingly powerful AI systems. Previously, Risto worked for the World Economic Forum, did research for the European Commission, and provided research support at the Berkeley Existential Risk Initiative, all on AI. He has a master’s degree in Philosophy and Public Policy from the London School of Economics and Political Science. In part 1, Risto tells us how he got into this line of work and helps us understand the basic form of the act, what it regulates, how it defines risk, and more. All this plus our usual look at today's AI headlines. Transcript and URLs referenced at HumanCusp Blog.


Monday Feb 06, 2023

138 - Guest: Anil Seth, AI-Human Consciousness Expert, part 2




What does it mean to be conscious? And why should we care? To answer that, we have the man who wrote the book: Being You: The New Science of Consciousness. Anil Seth is Professor of Cognitive and Computational Neuroscience at the University of Sussex, and a TED speaker with over 13 million views. He came on the show to help us understand more about consciousness, because the debate over whether an AI has become conscious may not be far off, and yet we have no good way of settling that debate. You may be sure that you're conscious, but good luck proving it to someone on the other side of a computer link. Whether an AI is conscious will have pivotal implications for governance, not to mention our collective self-image. Even if you're sure an AI can't be conscious, the debate will occupy a huge space in our world. Anil has a PhD in artificial intelligence and has even rapped about consciousness with Baba Brinkman. Being You was a 2021 Book of the Year for The Guardian, The Economist, The New Statesman, and Bloomberg Business. In 2022, Google engineer Blake Lemoine claimed that LaMDA had become sentient and had asked for an attorney to protect its right to exist. So in part 2, I put Anil on the stand at an imaginary trial as an expert witness on how to tell whether an AI is conscious. We also talk about whether the mind is software or can be uploaded, Anil's evaluation of ChatGPT, his predictions for the next ten years, and how you can take part in his Perception Census. All this plus our usual look at today's AI headlines. Transcript and URLs referenced at HumanCusp Blog.
